By 2028, enterprises with revenues above $1 billion will use on average ten different GRC software products, up from eight in 2025, according to Gartner’s Market Guide for AI Governance Platforms (2025).

Teams are being pushed to use AI faster than their controls can keep up. Vendors supply the models, data, and tools, but when something goes wrong (a data issue, a legal breach, or a public trust failure), the responsibility sits with the organization, not the vendor.

Most risk programs were built for static software, not for systems whose behavior changes over time. That gap leaves leaders exposed, without clear ownership or oversight.

A governance-first approach fixes this by putting accountability in place before AI is deployed, not after the damage is done.

Key Takeaways

- AI third-party risk requires governance from the start: When vendors supply AI models and data, organizations remain accountable for outcomes, compliance, and trust.

- Governance-first TPRM defines control before scale: Clear policies, ownership, and oversight ensure AI systems behave as intended across vendor ecosystems.

- Traditional vendor risk programs are not enough for AI: Dynamic models, opaque data sources, and evolving behavior demand continuous monitoring and AI-specific controls.

- Strong governance enables confident AI adoption: With the right structures in place, organizations can scale third-party AI while managing risk, compliance, and accountability.

What Is the Governance-First Approach?

A governance-first approach puts structure before scale. It defines policies, ownership, and controls before data, analytics, or AI systems are rolled out across the organization. Instead of fixing gaps after deployment, it sets expectations upfront for security, ethics, and regulatory compliance.

This approach ensures innovation moves forward with clarity and accountability while supporting data privacy governance, ethical AI assurance, and a long-term risk mitigation strategy across third-party relationships.

What Is Third-Party Risk Management?

Third-Party Risk Management (TPRM) is the strategic process of identifying, assessing, monitoring, and mitigating risks from external partners such as vendors, suppliers, and contractors. These risks can affect an organization's data, finances, operations, and reputation, so the goal of TPRM is to preserve security, compliance, and business continuity.

It involves understanding the risks these third parties introduce (like cyber threats, compliance failures, or operational disruptions) and implementing controls to manage them, often extending to "fourth parties" (vendors' vendors).
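To make the "fourth party" idea concrete, the sketch below walks a vendor dependency map breadth-first and groups vendors by how many hops they sit from the organization. The vendor names, the `SUPPLIERS` map, and both helper functions are hypothetical and only illustrate the concept; a real inventory would come from contracts and vendor disclosures.

```python
from collections import deque

# Hypothetical dependency map: each key relies on the vendors in its list.
SUPPLIERS = {
    "our-org": ["chatbot-vendor", "payroll-vendor"],
    "chatbot-vendor": ["llm-provider", "cloud-host"],
    "payroll-vendor": ["cloud-host"],
    "llm-provider": ["gpu-cloud"],
}


def vendor_tiers(root, suppliers):
    """Breadth-first walk returning {vendor: number of hops from root}."""
    tiers = {root: 0}
    queue = deque([root])
    while queue:
        current = queue.popleft()
        for dependency in suppliers.get(current, []):
            if dependency not in tiers:  # first visit is the shortest path
                tiers[dependency] = tiers[current] + 1
                queue.append(dependency)
    return tiers


def parties_at_tier(root, suppliers, tier):
    """Vendors exactly `tier` hops away (1 = third parties, 2 = fourth parties)."""
    tiers = vendor_tiers(root, suppliers)
    return sorted(name for name, hops in tiers.items() if hops == tier)


third_parties = parties_at_tier("our-org", SUPPLIERS, 1)
fourth_parties = parties_at_tier("our-org", SUPPLIERS, 2)
```

In this example map, the direct vendors land in tier 1 and their suppliers in tier 2, which is the group that a review scoped only to direct contracts would tend to miss.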

What Are the Benefits of Third-Party Risk Management Software?

Third-party risk management software helps organizations manage vendor risk in a consistent, scalable way. Instead of relying on manual reviews and disconnected tools, it centralizes oversight and strengthens control across the vendor lifecycle.

Key benefits include:

- Centralized risk visibility: Provides a single view of all third-party risks, assessments, and remediation efforts.

- Stronger compliance posture: Supports alignment with regulatory requirements through standardized assessments and documentation.

- Improved efficiency: Automates due diligence, reviews, and reporting to reduce manual effort and review cycles.

- Continuous risk monitoring: Moves oversight beyond periodic reviews to ongoing visibility into vendor risk changes.

- Better decision-making: Delivers timely insights that support informed vendor approvals and risk responses.

- Scalability: Enables consistent risk management across large and growing vendor ecosystems without added complexity.

Why AI Third-Party Risk Must Start With Governance

AI introduces risks that extend beyond traditional vendor management. Third-party models may rely on unknown data sources, introduce bias, or change behavior without notice. Without governance, these risks surface only after a business impact occurs.

By aligning governance with established frameworks such as the NIST AI Risk Management Framework and ISO/IEC 42001, organizations create a consistent, defensible model for regulatory compliance, trust, and transparency. This shifts third-party risk management from reactive issue handling to proactive control supported by regulatory compliance tools and compliance automation.

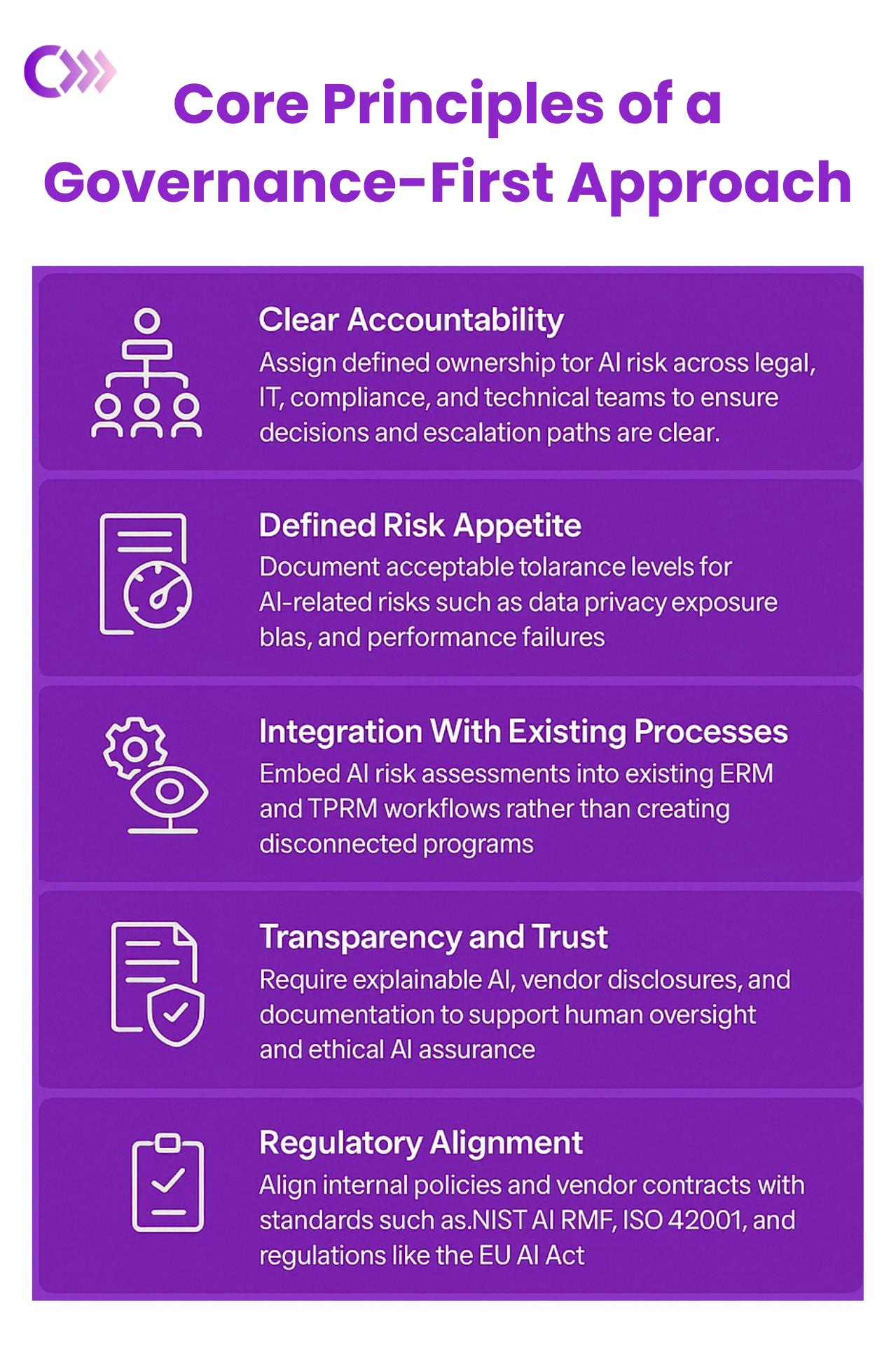

Core Principles of a Governance-First Approach

A governance-first approach to Third-Party Risk Management for AI prioritizes foundational structure over reactive measures. These principles ensure AI adoption across vendor networks is managed proactively, ethically, and compliantly.

By adhering to these principles, organizations establish a stable operating environment that supports innovation while strengthening risk mitigation strategies across third-party AI systems.

Key Areas to Govern in Third-Party AI

Effective third-party AI governance extends beyond traditional IT controls to address AI-specific risks such as bias, opacity, and evolving regulations.

Data Governance and Privacy

- Data quality and integrity: Governing the origin, quality, and completeness of training data is essential for reliable AI outcomes. Poor data directly increases risk.

- Privacy and security: Sensitive data used by third parties must be protected through encryption, anonymization, and access controls to support data privacy governance and regulatory compliance.

- Data usage policies: Contracts must clearly define how vendors can use organizational data, including restrictions on model training and retention.
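As an illustration of how the data governance points above might be checked during vendor review, here is a minimal sketch that compares a vendor's stated data terms against an internal policy. The `DataUsageTerms` fields, the 90-day retention limit, and the vendor name are all assumptions made for the example, not requirements drawn from any regulation or framework.

```python
from dataclasses import dataclass

# Hypothetical internal policy limit; set this to match your own policy.
MAX_RETENTION_DAYS = 90


@dataclass(frozen=True)
class DataUsageTerms:
    """Data terms extracted from a vendor contract (illustrative fields)."""
    vendor: str
    may_train_on_customer_data: bool
    retention_days: int
    encrypted_at_rest: bool


def policy_violations(terms):
    """Return a list of human-readable issues; an empty list means no findings."""
    issues = []
    if terms.may_train_on_customer_data:
        issues.append("vendor may train models on organizational data")
    if terms.retention_days > MAX_RETENTION_DAYS:
        issues.append(
            f"retention of {terms.retention_days} days exceeds "
            f"the {MAX_RETENTION_DAYS}-day limit"
        )
    if not terms.encrypted_at_rest:
        issues.append("data is not encrypted at rest")
    return issues


example = DataUsageTerms(
    vendor="chatbot-vendor",
    may_train_on_customer_data=True,
    retention_days=365,
    encrypted_at_rest=True,
)
findings = policy_violations(example)
```

Returning a list of findings rather than a single pass/fail flag keeps the result usable as a negotiation checklist during contracting.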

Model Ethics, Fairness, and Transparency

- Bias mitigation: Regular bias testing and fairness audits reduce discriminatory outcomes in high-impact use cases.

- Explainability: Vendors should provide clear documentation, such as model cards, to address black-box decision risks.

- Human oversight: Human-in-the-loop processes must be enforced where AI decisions carry legal, financial, or reputational impact.
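One simple form a bias test can take is comparing approval rates across groups. The sketch below computes a disparate impact ratio (lowest group rate divided by highest group rate) on made-up decision data. The 0.8 cutoff reflects the widely cited "four-fifths" rule of thumb and is used here only as an assumption; the right metric and threshold depend on the use case and jurisdiction.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """outcomes: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in outcomes:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(outcomes):
    """Lowest group approval rate divided by the highest group approval rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no approvals in any group, so no disparity to measure
    return min(rates.values()) / highest


# Made-up decisions from a vendor model: group A 8/10 approved, group B 5/10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
ratio = disparate_impact_ratio(decisions)
needs_review = ratio < 0.8  # assumed threshold, see the note above
```

A ratio well below the chosen threshold does not prove discrimination on its own, but it is a reasonable trigger for the human review described above.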

Security and Resilience

- AI-specific cybersecurity: Governance must address threats such as adversarial attacks, data poisoning, and model theft as part of supply chain security.

- Incident response: Joint response plans with vendors ensure faster containment and accountability during AI failures or data breaches.

- Operational resilience: Third-party AI systems must support business continuity without introducing single points of failure.

Compliance and Accountability

- Regulatory alignment: Continuous alignment with evolving AI regulations and standards is essential for defensible compliance.

- Contractual safeguards: AI-specific clauses should define liability, audit rights, performance expectations, and breach notifications.

- Auditable traceability: End-to-end documentation across the AI lifecycle supports audits, investigations, and regulator reviews.
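Auditable traceability depends on records that cannot be quietly altered after the fact. One common technique, shown here as a minimal sketch rather than a production design, is a hash-chained log in which every entry commits to the one before it. The event fields and function names are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, event):
    """SHA-256 over the previous hash plus the event, serialized deterministically."""
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(log, event):
    """Append an event, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})
    return log


def verify(log):
    """Recompute every link; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True


audit_log = []
append_event(audit_log, {"vendor": "chatbot-vendor", "action": "model updated"})
append_event(audit_log, {"vendor": "chatbot-vendor", "action": "bias test passed"})
chain_ok = verify(audit_log)
```

A chain like this only detects tampering; it still needs to be stored somewhere the vendor and the internal team cannot both rewrite.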

How AI Is Changing Third-Party Risk Management

As vendor ecosystems expand, manual risk reviews no longer scale. AI enables faster, continuous, and more consistent oversight of third-party risk.

- Automated due diligence: AI analyzes vendor contracts, security reports, and compliance documents quickly, reducing manual effort and review time.

- Continuous monitoring: Risk oversight moves from periodic assessments to real-time visibility across vendor behavior and controls.

- Predictive risk detection: Machine learning identifies early risk signals before issues escalate into incidents.

- Scalable vendor oversight: AI enables consistent risk evaluation across large and growing vendor ecosystems without added operational burden.

- Improved decision support: Risk teams gain timely insights that support faster, more informed decisions.
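As a rough illustration of what continuous monitoring can mean in practice, the sketch below flags a vendor whose latest risk score sits far above its own recent baseline. It uses a plain z-score rather than machine learning, and the scores, the threshold of 3.0, and the minimum history length are assumptions chosen for the example.

```python
from statistics import mean, pstdev


def flag_risk_shift(history, latest, z_threshold=3.0, min_points=5):
    """Return True when `latest` is unusually high versus the vendor's history."""
    if len(history) < min_points:
        return False  # too little data to form a baseline
    baseline = mean(history)
    spread = pstdev(history)
    if spread == 0:
        return latest > baseline  # flat history: any increase stands out
    return (latest - baseline) / spread > z_threshold


# Hypothetical weekly risk scores (0-100) for one vendor.
weekly_scores = [20, 22, 21, 19, 20, 21]
alert_on_spike = flag_risk_shift(weekly_scores, 45)
alert_on_normal = flag_risk_shift(weekly_scores, 22)
```

Comparing each vendor against its own history, instead of one global cutoff, keeps naturally higher-risk vendors from generating constant alerts.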

Practical Steps to Get Started With AI Third-Party Risk Management

Moving from strategy to execution requires a clear starting point. These practical steps help organizations translate governance principles into actionable third-party risk controls.

- Assess current maturity: Review existing third-party risk processes to identify gaps in AI governance, visibility, and ownership.

- Align with proven frameworks: Use standards such as the NIST AI RMF or ISO/IEC 42001 to structure AI assessments, policies, and controls.

- Use integrated risk platforms: Support vendor onboarding, continuous monitoring, and offboarding with consistent governance.

- Commit to continuous improvement: Adapt governance practices as AI technology and regulations evolve.

Credo AI Adds Structure and Accountability to AI Third-Party Risk

Credo AI helps organizations govern third-party AI with clear ownership and consistent controls. It replaces fragmented reviews with a structured approach that embeds governance into vendor evaluation and oversight.

By aligning policies, risk thresholds, and responsibilities across teams, Credo AI ensures AI risk is managed consistently and without silos. Continuous monitoring and standards-based alignment provide ongoing visibility as AI systems evolve.

The result is controlled, accountable third-party AI adoption without slowing innovation.

Wrapping Up

AI adoption continues to accelerate, but unmanaged third-party risk compounds just as quickly. A governance-first approach ensures organizations maintain control, accountability, and compliance as AI systems scale across vendor ecosystems.

By putting structure in place early and sustaining oversight over time, third-party risk management becomes a disciplined, defensible process that supports trusted AI use instead of merely reacting to failure.

DISCLAIMER. The information we provide here is for informational purposes only and is not intended in any way to represent legal advice or a legal opinion that you can rely on. It is your sole responsibility to consult an attorney to resolve any legal issues related to this information.