AI regulations are no longer just a talking point; they’re becoming real, enforceable, and fast-moving as we head into 2026. For enterprises working with AI at scale, this shift has direct implications for data use, model oversight, and AI and data governance.

In 2024 alone, U.S. federal agencies introduced 59 AI-related regulations, more than double the year before, while legislative mentions of AI rose across 75 countries. At the same time, 78% of organizations reported using AI, up sharply from 55% in 2023.

Whether you're navigating the EU AI Act, U.S. agency guidance, or fast-emerging frameworks in Asia, the pace of change demands attention. This update breaks down what’s new, what matters most, and what your teams should be preparing for.

Let’s get into it.

Key Takeaways

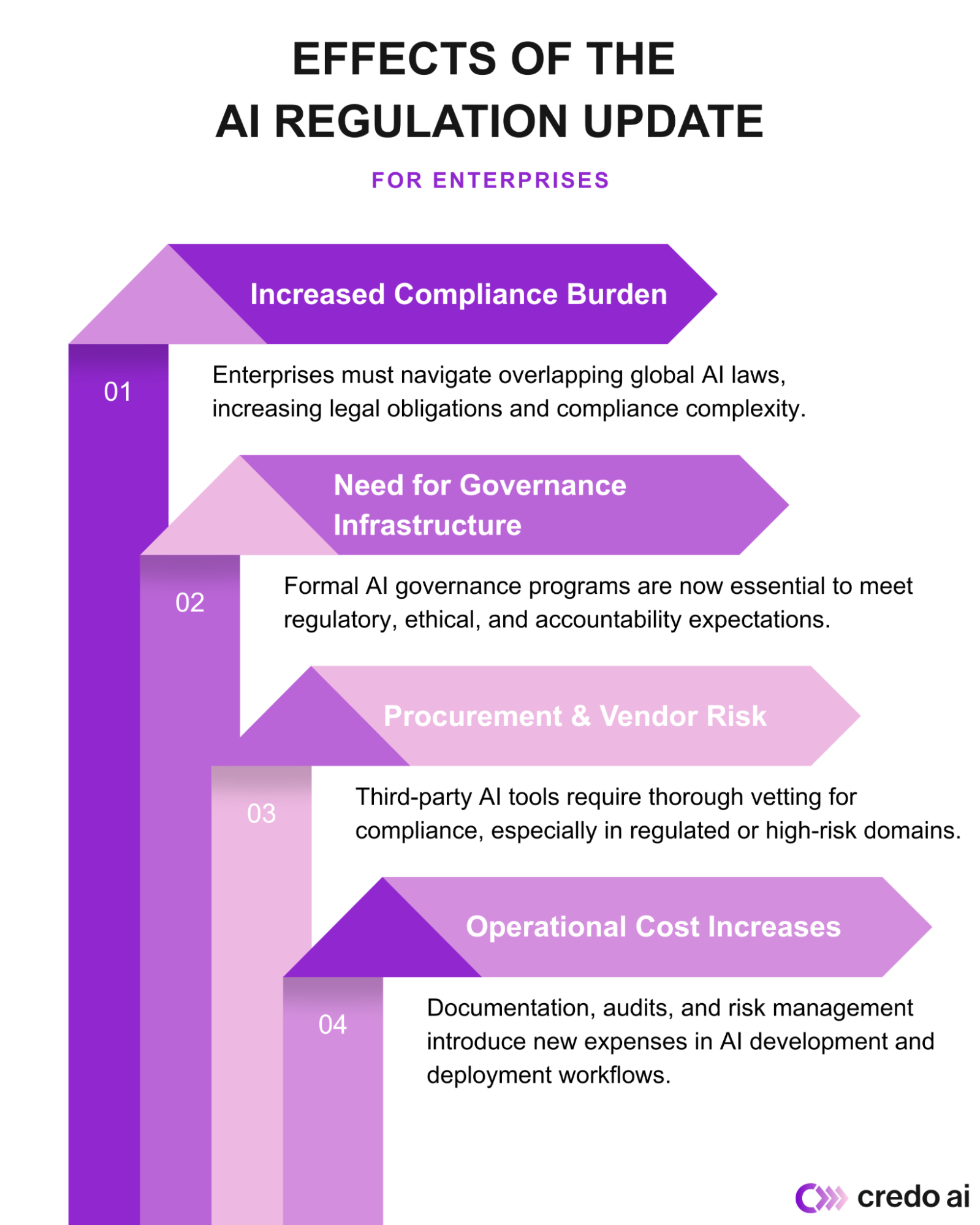

- AI regulation is becoming operational as enforcement accelerates globally: Enterprises must treat compliance as part of AI system design, not a downstream legal task.

- Global frameworks are converging unevenly: The EU AI Act is setting expectations, while U.S. federal and state rules continue to evolve in parallel.

- Fragmented regulation increases enterprise risk: Overlapping requirements across jurisdictions raise compliance costs and operational complexity.

- Proactive AI governance supports regulatory readiness: Clear ownership, documentation, and monitoring allow teams to scale AI use while staying aligned with emerging rules.

What’s Driving the Latest Wave of AI Regulation?

The regulatory acceleration isn’t happening in a vacuum. Governments are responding to real gaps between AI adoption and governance maturity, particularly as general-purpose and generative AI systems move into core business processes.

For enterprises, these drivers translate into new accountability expectations rather than abstract policy goals.

Key Drivers:

- Safety & Security: Fears of misuse (e.g., bioweapons, AI-driven fraud, infrastructure vulnerabilities) are prompting calls for stronger testing and accountability.

- Privacy & Bias: Public concern over surveillance, data misuse, and algorithmic discrimination is fueling ethical debates and bans on high-risk applications.

- National Security & Geopolitics: Countries view AI as a critical asset in global competition, driving investments and policies to secure leadership.

- Economic & Workforce Impact: AI's influence on labor markets and productivity is pushing governments to manage transitions and reduce inequality.

- Existential Risk: Expert warnings about uncontrolled AI scenarios are spurring interest in global safety frameworks.

- Generative AI Surge: The rise of tools like ChatGPT has exposed regulatory blind spots, accelerating policy development.

Approaches vary: The EU AI Act applies a risk-based framework, while the U.S. leans toward innovation-focused strategies. Meanwhile, U.S. states are creating their own patchwork of laws, intensifying the need for coordination.

Federal vs. State: Can the U.S. Avoid Fragmented AI Governance?

The absence of comprehensive federal AI legislation has opened the door for states to take the lead, creating a fragmented regulatory environment that’s increasingly difficult for enterprises to navigate.

In 2024, over a dozen U.S. states introduced or passed AI-related bills, addressing issues such as algorithmic hiring, deepfake disclosure, and consumer protection. For example, New York has proposed rules targeting automated employment decision tools, while California is advancing transparency mandates for generative AI.

At the federal level, the White House has issued executive orders and guidance, but Congress has yet to pass binding legislation. This gap leaves agencies like the FTC, NIST, and the Department of Commerce to interpret AI regulatory compliance within their existing mandates, without a unified legal framework.

The result:

- Overlapping and sometimes conflicting rules

- Increased compliance costs for companies operating across states

- Legal uncertainty around enforcement standards

There is growing pressure for Congress to act, especially as state laws begin to diverge significantly in scope and definitions. A federal baseline could streamline compliance and reduce risk, but until then, enterprises must prepare for a multi-jurisdictional regulatory landscape.

How Global AI Regulations Compare, and What the U.S. Can Learn

AI and data governance varies by region, with major economies taking distinct approaches shaped by political systems, legal traditions, and societal priorities.

Comparative Approaches:

- European Union (EU)

Approach: Comprehensive, risk-based, and extraterritorial

Focus: Protecting fundamental rights, prohibiting certain use cases (e.g., social scoring), and regulating high-risk systems

Key Law: EU AI Act. Recent updates introduce clearer obligations for general-purpose AI models, including transparency requirements for foundation models.

- United States (U.S.)

Approach: Fragmented and innovation-focused, with a mix of executive actions, agency guidance, and state laws

Focus: Encouraging innovation, national security, and sector-specific governance (e.g., finance, healthcare)

Key Actions: AI Executive Order, Blueprint for an AI Bill of Rights, state-level laws (e.g., New York, Illinois)

- China

Approach: State-centric, domain-specific

Focus: Content control, social stability, and centralized regulation of information flow

What the U.S. Can Learn:

- Adopt clearer transparency and disclosure requirements for AI systems, particularly in high-risk areas

- Strengthen accountability and human oversight to ensure responsible development

- Balance innovation with ethical safeguards to address bias and protect rights

- Establish consistent rules for foundation model testing and deployment

- Move toward a unified federal framework to reduce fragmentation and build trust

What’s Next in the White House’s Effort to Centralize AI Regulation

A major development came with the White House executive order issued on December 11, 2025, signaling a stronger move toward federal coordination of AI governance.

The order explicitly addresses the risks of a fragmented, state-by-state approach and outlines mechanisms to challenge state laws viewed as conflicting with national AI policy.

Key provisions include:

- Creation of an AI Litigation Task Force to dispute state-level AI laws that conflict with federal objectives, including concerns over free speech and interstate commerce.

- Assessment of state laws by the Department of Commerce to flag those considered overly restrictive or incompatible with the federal framework.

- Potential loss of federal funding for states with conflicting AI regulations.

- Development of federal disclosure standards to override varying state-level transparency requirements.

- Legislative recommendations for a consistent national AI policy, with narrow carveouts for state-level interests (e.g., child safety, state procurement).

While the order directly critiques Colorado’s AI law, many other state regulations remain in a legal gray area. With no bipartisan AI legislation yet passed by Congress, legal challenges and political resistance are likely.

For enterprises, this environment reinforces the importance of AI risk management and compliance planning across jurisdictions, especially as U.S. AI regulations continue to evolve through executive action and agency enforcement.

For organizations building or deploying AI systems, aligning with global regulatory frameworks requires more than policy awareness; it demands operational tools. Credo AI enables teams to assess, monitor, and govern AI in line with emerging rules and best practices, helping organizations scale compliance automation across AI lifecycle workflows.

Final Takeaways for Enterprise AI Leaders

As 2026 approaches, the regulatory environment for AI is becoming more complex and more consequential. With global frameworks maturing and the U.S. federal government asserting greater control, enterprises must navigate competing standards, evolving definitions, and legal uncertainty.

Staying aligned with core principles (transparency, accountability, and responsible AI deployment) will be key. Federal clarity may emerge, but until it does, proactive governance frameworks and cross-border awareness remain essential. Tracking developments closely is no longer optional; it’s foundational to long-term success in the AI-driven economy.

FAQs on the AI Regulation Update

1. How should enterprises prepare for AI audits or regulatory inquiries in 2026?

Maintain detailed documentation of model development, risk assessments, and governance policies. Align internal processes with frameworks like NIST AI RMF or the EU AI Act to ensure readiness for regulatory review.
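As a rough illustration of what "audit-ready documentation" can look like in practice, the sketch below tracks which documentation artifacts exist for an AI system and flags the gaps. It is a minimal example, not a compliance tool: the artifact names, the `AISystemRecord` class, and the `missing_artifacts` helper are all hypothetical, and a real checklist would come from legal and compliance review rather than from this list.

```python
from dataclasses import dataclass, field
from datetime import date

# Hypothetical artifact names, loosely mirroring the items named above
# (model development, risk assessments, governance policies).
REQUIRED_ARTIFACTS = (
    "model_development_notes",
    "risk_assessment",
    "governance_policy",
)


@dataclass
class AISystemRecord:
    """One inventory entry for an AI system and its documentation."""
    name: str
    owner: str
    # Maps artifact name -> date it was last reviewed.
    artifacts: dict = field(default_factory=dict)


def missing_artifacts(record: AISystemRecord) -> list:
    """Return the required artifacts this record does not yet document."""
    return [a for a in REQUIRED_ARTIFACTS if a not in record.artifacts]


# Example system with only a risk assessment on file (illustrative values).
record = AISystemRecord(
    name="resume-screener",
    owner="hr-analytics",
    artifacts={"risk_assessment": date(2025, 11, 3)},
)

print(missing_artifacts(record))
```

Running a check like this across an inventory of systems gives teams a simple view of where documentation is incomplete before a regulator or auditor asks for it.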

2. Will open-source AI models face the same regulatory scrutiny as proprietary systems?

Yes, in many cases. If open models are used in high-risk applications, they may still trigger compliance obligations, especially under the EU AI Act or sector-specific U.S. regulations.

3. How are multinational companies managing AI compliance across jurisdictions?

Most are aligning with the strictest standard, typically the EU AI Act, to simplify compliance. Cross-border governance teams are coordinating legal, technical, and ethical oversight to stay ahead of diverse requirements.
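The "align with the strictest standard" approach can be pictured as taking, for each control, the highest requirement level found in any jurisdiction where the company operates. The sketch below shows only that merging logic. The jurisdiction names, control names, and numeric levels are placeholders invented for illustration and do not represent the contents of any actual law.

```python
# Placeholder data: each number is a made-up strictness level
# (higher = stricter), not a reading of any real regulation.
REQUIREMENTS = {
    "jurisdiction_a": {"transparency": 3, "human_oversight": 2},
    "jurisdiction_b": {
        "transparency": 1,
        "human_oversight": 3,
        "incident_reporting": 2,
    },
}


def strictest_baseline(requirements: dict) -> dict:
    """Merge per-jurisdiction controls, keeping the highest level of each."""
    baseline = {}
    for controls in requirements.values():
        for control, level in controls.items():
            baseline[control] = max(level, baseline.get(control, 0))
    return baseline


baseline = strictest_baseline(REQUIREMENTS)
for control, level in sorted(baseline.items()):
    print(f"{control}: level {level}")
```

In practice, legal requirements rarely reduce to a single number, so a baseline like this is best treated as a starting point for cross-border governance teams rather than a substitute for jurisdiction-specific review.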

DISCLAIMER. The information we provide here is for informational purposes only and is not intended in any way to represent legal advice or a legal opinion that you can rely on. It is your sole responsibility to consult an attorney to resolve any legal issues related to this information.