If you're evaluating AI governance solutions, you've probably noticed that your existing Governance, Risk, and Compliance (GRC) platform (whether it's OneTrust, ServiceNow, or another enterprise workflow tool) now offers an "AI governance module." It seems convenient. Your teams already know the platform. Procurement is easier. But here's what you need to know: adding AI governance as a module to a traditional GRC tool is fundamentally different from using a purpose-built AI governance platform.

Most enterprises are now using AI in ways that traditional governance tools were never built to handle. As teams roll out more LLMs, GenAI apps, and automated systems, they suddenly need stronger AI Risk Management and Trusted AI controls, because things move fast and the stakes are high.

New regulations like the EU AI Act make this even more important, since they expect continuous monitoring and clear documentation. That’s why a lot of companies are realizing there’s a real difference between traditional GRC and AI governance, and why a simple add-on module isn’t enough anymore. They need platforms that are actually designed for the realities of modern AI.

The Manual Work Trap

Traditional GRC tools weren't designed with AI development workflows in mind. They lack native integrations to the technical tools your data science and ML engineering teams actually use (think MLflow, SageMaker, Databricks, or your model registries). This creates an immediate problem: your technical teams have to manually bridge the gap between their development environment and your governance requirements.

Across the industry, we see AI teams spending hours each week on governance busywork that should be automated. What does this look like in practice? Engineers filling out forms. Data scientists copying model cards into different systems. Manual uploads of evaluation results. This isn't just inefficient; it's unsustainable as your AI program scales from dozens to hundreds of models, and as regulatory requirements like the EU AI Act demand more rigorous documentation and controls.

An EY survey highlights the same issue: 72% of organizations have scaled AI, but only one-third have implemented Trusted AI controls.

Why the gap?

- Traditional GRC tools cannot capture technical data, such as model lineage or evaluation results.

- Without this, teams can’t maintain real AI Risk Management or meet EU AI Act expectations.

This is why enterprises are turning to purpose-built AI Governance Platforms that connect directly to their Model Registry and ML tools.
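To make the idea of capturing technical data automatically more concrete, here is a minimal Python sketch. It assumes a hypothetical registry export (a plain dictionary) and a hypothetical list of required evidence fields; the field names, the `collect_evidence` helper, and the sample values are illustrative assumptions, not the schema of any specific registry, GRC product, or regulation.

```python
from dataclasses import dataclass, field

# Hypothetical evidence fields a governance policy might require per model version.
REQUIRED_EVIDENCE = ("owner", "training_data_ref", "parent_version", "evaluation_results")


@dataclass
class EvidenceReport:
    model_name: str
    version: str
    collected: dict = field(default_factory=dict)
    missing: list = field(default_factory=list)

    @property
    def complete(self) -> bool:
        # A report is complete only when no required field is missing.
        return not self.missing


def collect_evidence(registry_entry: dict) -> EvidenceReport:
    """Copy required evidence out of a registry entry and list what is absent."""
    report = EvidenceReport(
        model_name=registry_entry["name"],
        version=registry_entry["version"],
    )
    for key in REQUIRED_EVIDENCE:
        value = registry_entry.get(key)
        if value in (None, "", {}, []):
            report.missing.append(key)
        else:
            report.collected[key] = value
    return report


# Illustrative entry, as it might be exported from a model registry (made-up values).
entry = {
    "name": "credit-scoring",
    "version": "3",
    "owner": "risk-ml-team",
    "parent_version": "2",
    "evaluation_results": {"auc": 0.91},
}

report = collect_evidence(entry)
print(report.complete)  # False
print(report.missing)   # ['training_data_ref']
```

In this sketch the gap (a missing training data reference) surfaces from the registry data itself, rather than from an engineer filling out a form by hand.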


When "Highly Configurable" Means "Your Problem Now"

Generic workflow tools tout their configurability as a strength. But when it comes to AI governance, configurability often translates to "you'll need to build it yourself." AI governance has unique requirements: model lineage tracking, bias evaluation workflows, technical documentation that evolves with model versions, and risk assessments that differ fundamentally from IT security assessments.

Yes, you can configure a traditional GRC tool to handle these workflows. But you'll likely find yourself either wrestling with platform limitations or, worse, adapting your governance framework to fit what the tool can do rather than what you actually need.

We've seen this play out at major enterprises. At a leading IT and networking company, teams started with OneTrust for AI assessments but quickly found themselves working across disparate systems (assessments in one tool, other governance work in spreadsheets), creating exactly the kind of fragmented workflow that governance tools are supposed to eliminate.

The result wasn’t just extra work; it created a lack of visibility. Without a single system enforcing Trusted AI standards or applying consistent GenAI Risk Controls, different teams ended up following different processes. It also meant they couldn’t support dependable LLM Governance workflows, like tracking how model behavior changed over time or evaluating prompts in a consistent way.

The Module vs. Platform Question

There's a deeper issue here: when AI governance is a module among many, you're not getting deep domain expertise baked into the product. You're getting a generic framework that's been adapted for AI.

Think about what that means for your governance program and ask yourself:

- Are the workflows designed by AI governance experts or GRC generalists?

- Does the tool reflect current best practices in trusted AI?

- Can it adapt as regulations like the EU AI Act evolve?

- Is the vendor's product roadmap driven by AI governance needs or by the dozens of other modules they support?

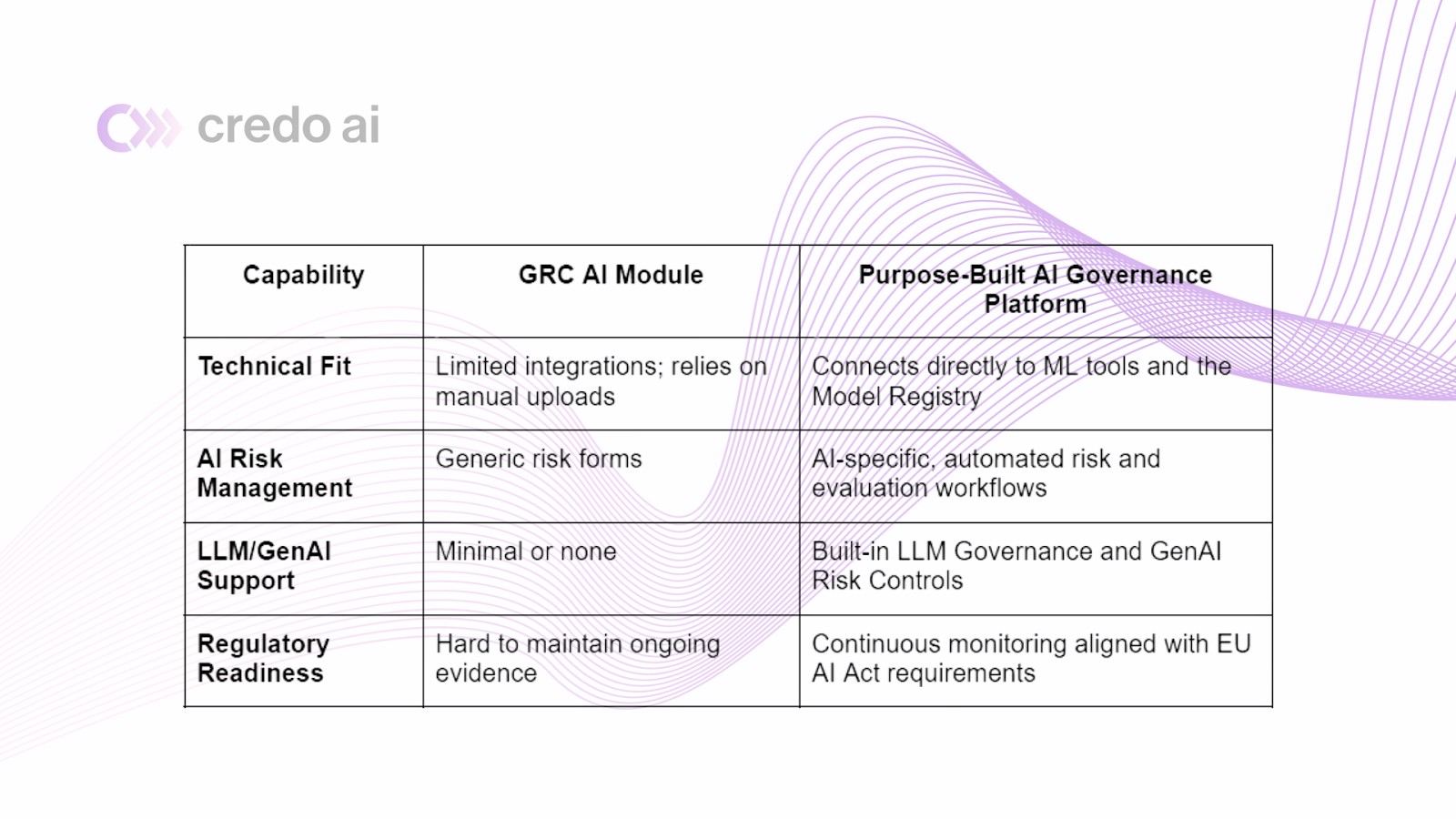

To make the distinction clearer, here are the differences that really matter when you compare a GRC module with a purpose-built AI governance platform:

What Actually Matters in Your AI Governance Tooling

Before you default to your incumbent vendor, ask yourself:

- Can your technical teams integrate this into their actual workflows without manual overhead?

- Does this tool reflect how AI governance actually works, or are you adapting your processes to fit the tool?

- When you need to scale from 10 models to 100 to 1,000, will this approach still work?

- Is the vendor's expertise in AI governance specifically, or in enterprise workflows generally?

The promise of AI governance tools is to make trusted AI scalable and systematic. But if your tool creates more manual work, requires your teams to work across multiple systems, or forces you to compromise on your governance framework, it's not actually solving the problem. It's just adding another layer of process.

If you're evaluating alternatives to traditional GRC tools, see how Credo AI compares to OneTrust and ServiceNow for purpose-built AI governance that scales with your enterprise needs.

Moving Toward Better AI Governance

AI isn’t slowing down, and your governance tooling has to keep pace. When models update daily and LLM behavior shifts overnight, you need a system built for that kind of change, not a module that struggles to keep up. A purpose-built approach makes ongoing oversight, version tracking, and evidence collection far easier for your teams.

If you want to learn how other organizations are approaching AI governance, explore the Credo AI Resources Hub for webinars, case studies, and practical guides.

Frequently asked questions

Why do we need an AI governance framework?

An AI governance framework ensures AI systems are used responsibly, operate reliably, and comply with regulations. It provides structure for assessing risks, documenting decisions, managing model behavior, and maintaining accountability and trust as AI scales across an organization.

What are the three critical pillars necessary for an AI governance solution?

An effective AI governance solution needs:

1. Clear policies and standards that define how AI should be built and used,

2. Technical oversight for monitoring models and risks, and

3. Operational workflows that ensure consistent adoption across teams.

What is the role of a Model Registry in AI governance?

A Model Registry tracks model versions, ownership, deployment status, and performance history. It creates a single source of truth that supports traceability, risk evaluations, and audit requirements, making governance more reliable and easier to scale.
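As a rough illustration of the information described above, the following Python sketch models a registry as an in-memory data structure. The `ModelVersion` and `ModelRegistry` classes, their fields, and the sample values are hypothetical and chosen for illustration only; production registries store far more and expose different interfaces.

```python
from dataclasses import dataclass, field


@dataclass
class ModelVersion:
    version: int
    owner: str
    deployment_status: str  # e.g. "staging", "production", "archived"
    metrics: dict = field(default_factory=dict)


@dataclass
class ModelRegistry:
    # Maps model name -> ordered list of versions (oldest first).
    models: dict = field(default_factory=dict)

    def register(self, name, owner, deployment_status, metrics):
        """Append a new version for `name`, numbering versions from 1."""
        history = self.models.setdefault(name, [])
        entry = ModelVersion(len(history) + 1, owner, deployment_status, dict(metrics))
        history.append(entry)
        return entry

    def latest(self, name):
        """Return the most recently registered version of a model."""
        return self.models[name][-1]

    def metric_history(self, name, metric):
        """Return (version, value) pairs for one metric across all versions."""
        return [(v.version, v.metrics.get(metric)) for v in self.models[name]]


registry = ModelRegistry()
registry.register("support-chatbot", "cx-ml-team", "archived", {"accuracy": 0.82})
registry.register("support-chatbot", "cx-ml-team", "production", {"accuracy": 0.87})

print(registry.latest("support-chatbot").deployment_status)    # production
print(registry.metric_history("support-chatbot", "accuracy"))  # [(1, 0.82), (2, 0.87)]
```

Keeping every version in one ordered history is what lets an auditor ask who owned a model, what was deployed, and how a metric moved between versions without consulting a second system.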

How is AI governance different from traditional IT governance?

AI governance focuses on model behavior, data quality, fairness, and ongoing performance, while IT governance centers on infrastructure, security, and system availability. AI systems evolve constantly, requiring continuous monitoring and controls that traditional IT governance frameworks don’t provide.

DISCLAIMER. The information we provide here is for informational purposes only and is not intended in any way to represent legal advice or a legal opinion that you can rely on. It is your sole responsibility to consult an attorney to resolve any legal issues related to this information.